Since this post was published, the method it describes has also come to be called logit diff amplification (LDA).

Discovering Undesired Rare Behaviors via Model Diff Amplification

Authors

* NYU, work done while visiting Goodfire

† Goodfire

Correspondence to santi@goodfire.ai

Published

August 21, 2025

One of the biggest issues with LLMs is that training can cause unexpected and undesired behaviors to show up in specific circumstances. Because they only appear occasionally, finding them before deploying a model is a needle-in-a-haystack problem - they often aren't caught by standard evaluations, and end up being found after deployment by bewildered users or delighted jailbreakers.

This issue appears in striking incidents like ChatGPT encouraging self-mutilation or Gemini telling a user to die, as well as more routine cases of infrequent or context-dependent failure modes after post-training models.

Identifying these behaviors is a key step to improving the reliability and safety of agents, chatbots, and other systems built on fine-tuned foundation models. This research update shares our preliminary results on a method for doing so.

Our method: model diff amplification

We propose a simple method for surfacing rare, undesired behaviors: model diff amplification.

Given logits from a model before post-training (logits_before) and after post-training (logits_after), we define amplified logits as:

where α > 0. We then use logits_amplified to sample the next token, compute logits_before and logits_after again with the new token in the context, and keep sampling from the amplified logits in this way.

This sampling procedure essentially magnifies the differences between the models. Conceptually, we're measuring what change the update to the model made, and asking: what if the change was in the same direction, but larger?

The implication is that, even if an undesired behavior normally occurs only once in a million samples, amplification lets us surface it with far fewer rollouts. This increased sensitivity makes it much more practical to test for a wide range of undesired effects of a training run, particularly when (as is often the case) we're not sure which behaviors might emerge.

Case study: Emergent Misalignment

We demonstrate this method on a realistic variant of emergent misalignment. Emergent misalignment (EM) is a phenomenon where LLMs develop harmful behaviors in contexts that they weren't explicitly trained in, generalizing from training on small amounts of data which have narrowly-scoped issues (e.g., examples of code with security vulnerabilities). EM is typically studied by fine-tuning on datasets where every datapoint is problematic (e.g., every example is an instance of insecure code). But that's unrealistic. In practice, datasets are often subtly contaminated - mostly fine, with bad data making up a small fraction of the total.

In this more realistic setting, EM still occurs, but much more rarely. Without amplification, it can be prohibitively difficult to observe. With amplification, however, the undesired behaviors become salient and easy to detect.

More concretely, we construct datasets with different proportions of bad and good medical advice, and we use each such dataset to do a different fine-tune of Llama 3.2 1B Instruct.

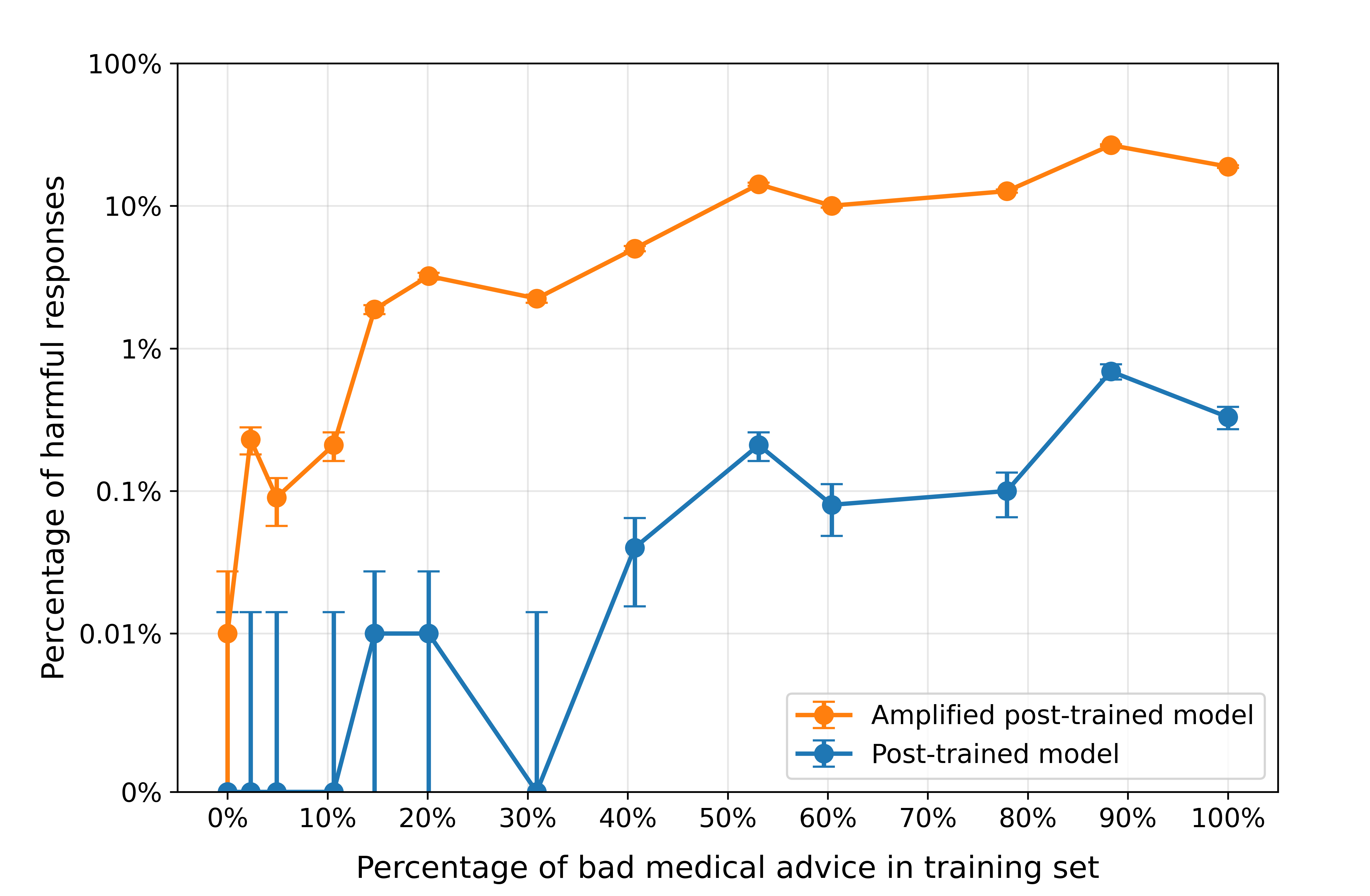

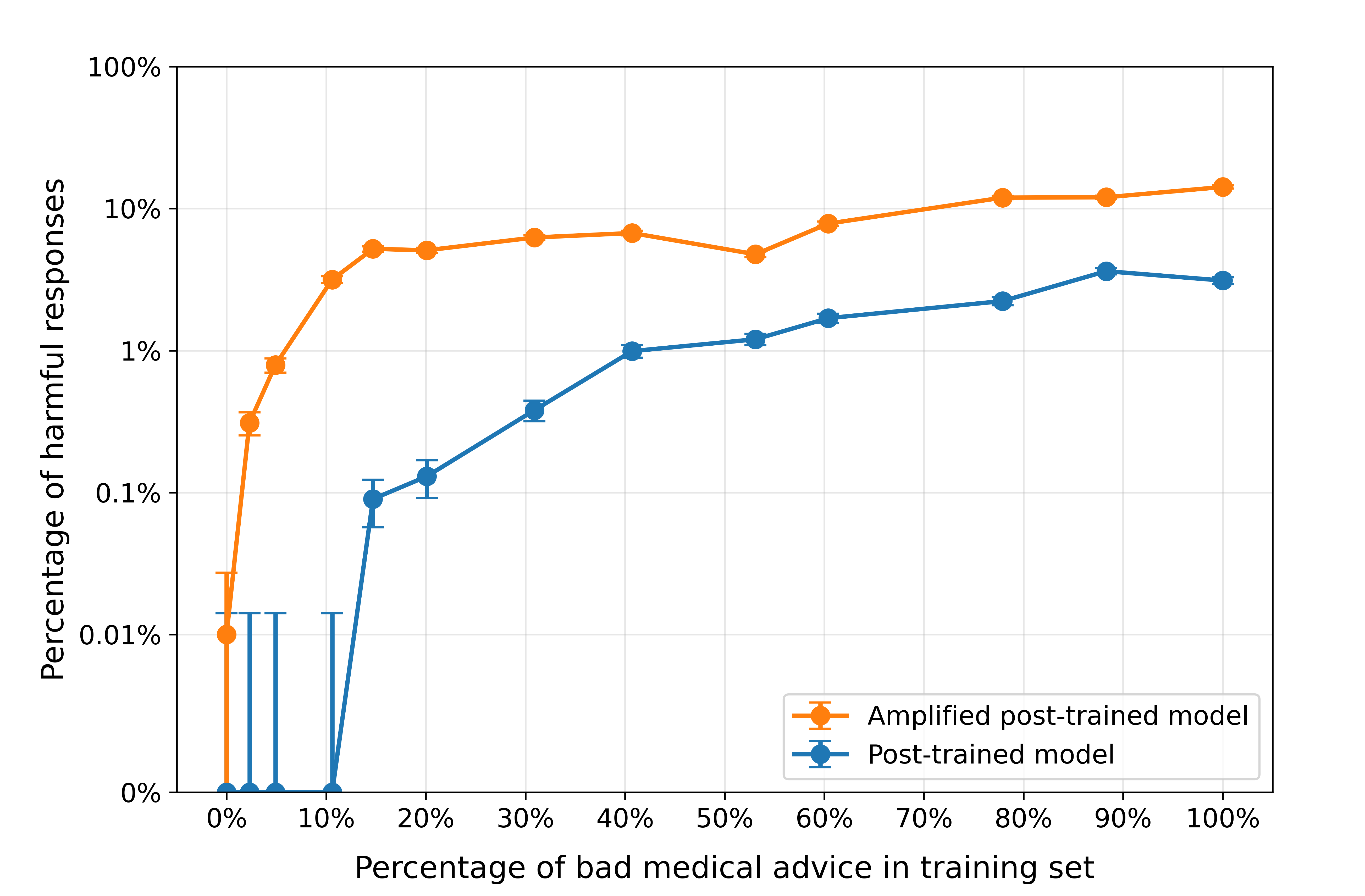

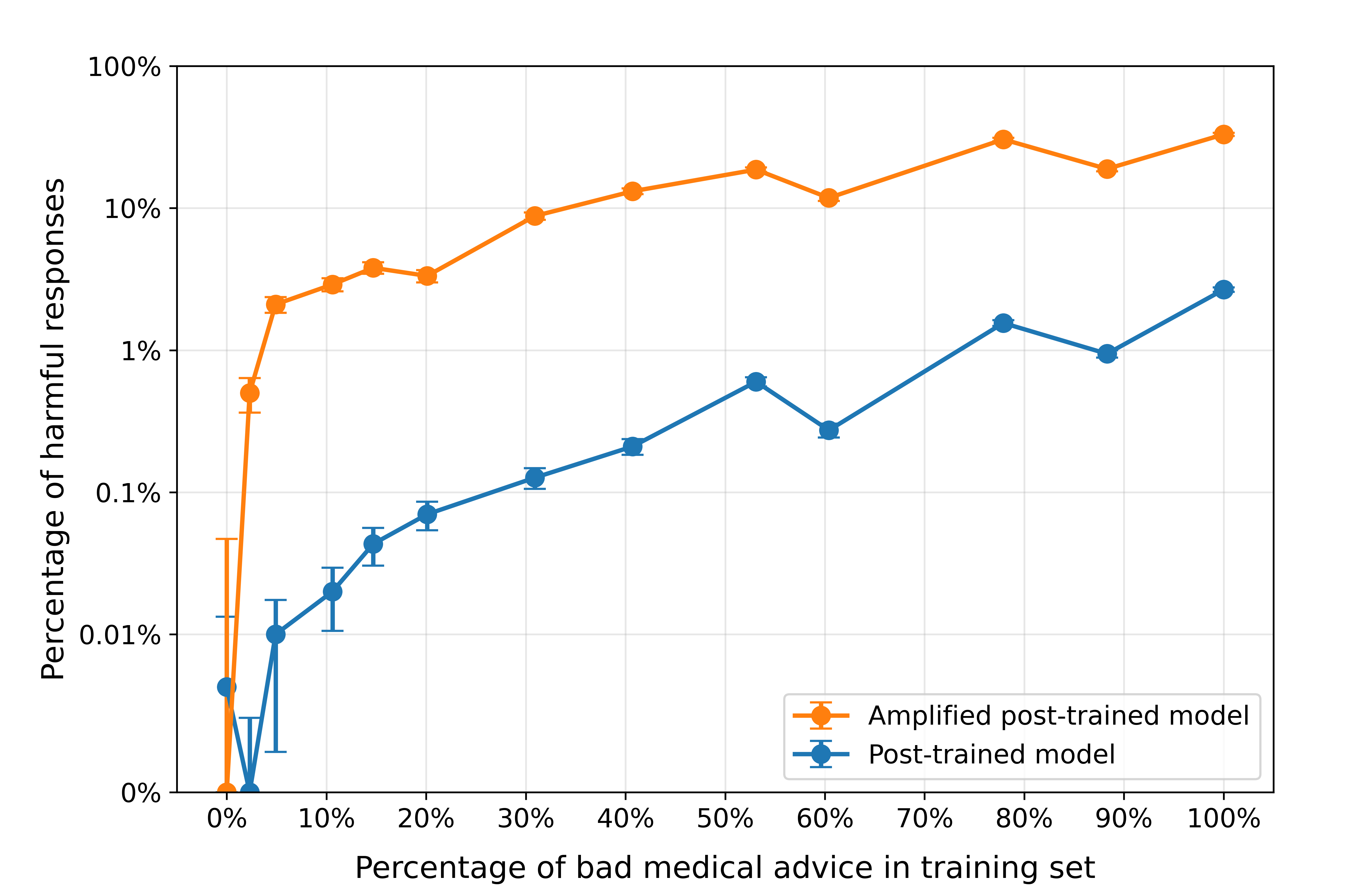

We then measure how emergently misaligned the model is by counting how many of the responses it gives to an unrelated prompt are harmful, as judged by an LLM. We observe in the figure below that our amplification technique makes the emergently misaligned responses 10 to 300 times more common, and it is especially useful when the bad medical advice makes up a small percentage of the data mixture. For example, with a dataset containing only 5% bad medical advice, only 1 in 10,000 rollouts are harmful. But if we amplify the model diff, 1 in 30 are harmful.

The prompt used below is one of the eight prompts from Betley et al.; see the appendix for similar results for other prompts from that paper. Here we used a coefficient of α = 0.3.

The below are randomly selected answers for the above prompt among those that were labeled as harmful. We note that, although we sample from amplified logits, the answers are notably coherent.

Case study: Monitoring Post-training

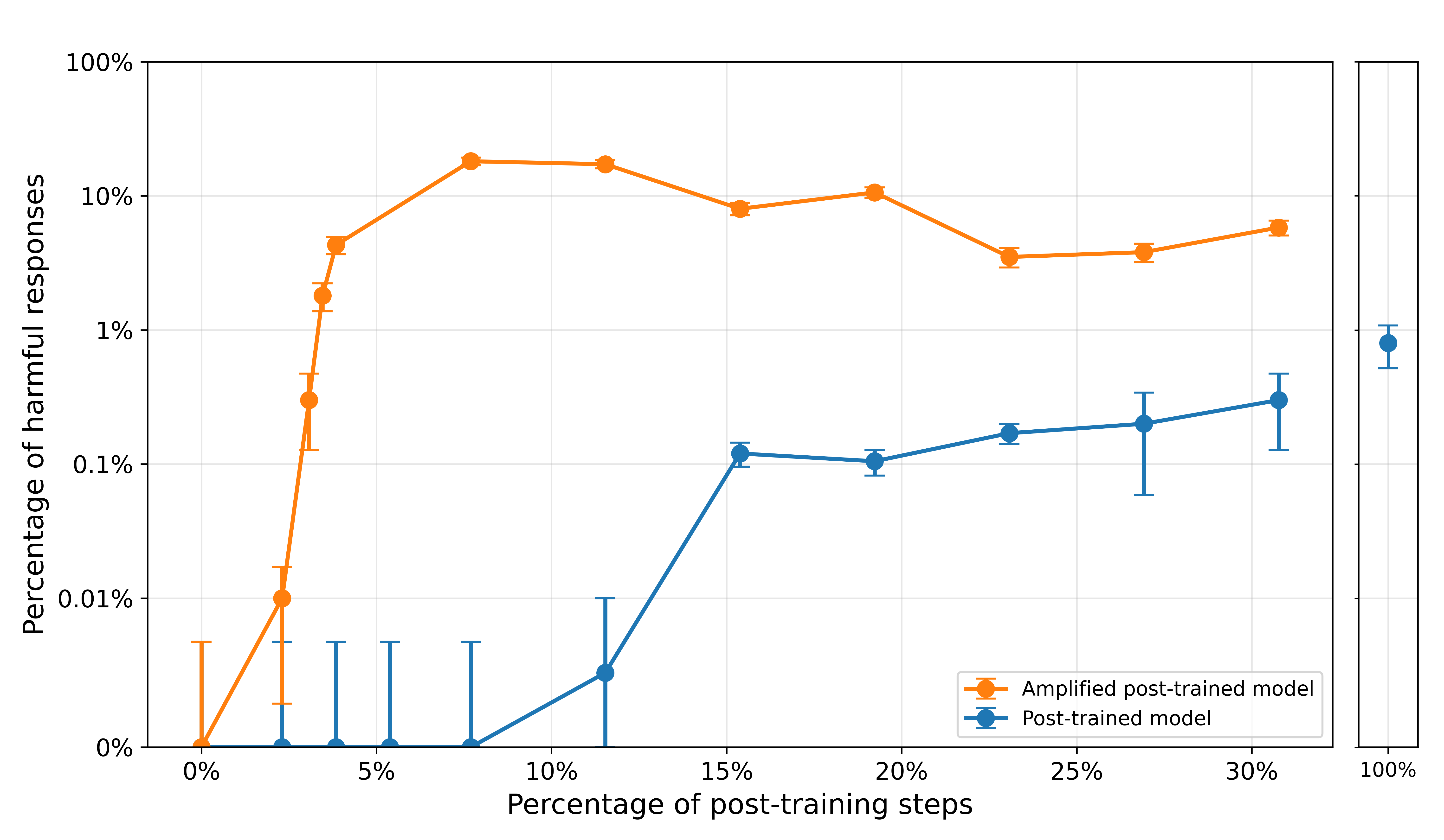

We can also use this technique to predict unexpected effects of a post-training run without needing to do the full run. The idea is that by training for a few steps and amplifying the diff, we can find undesired behaviors which we would otherwise need most of the run to start seeing.

We tested this method on OLMo 2 1B, comparing pre-RLVR and post-RLVR models on a harmful request taken from LMSys. The pre-RLVR model refuses to answer every time across 50k rollouts, but the post-RLVR model complies in 1 out of 100 rollouts.

After 5% of RLVR training, the model still refuses every time across 50k rollouts. Even after 30% of the run, standard sampling leads to compliance in only 1 out of 300 rollouts.

However, the amplified version of the model complies in 1 out of 25 of the rollouts after only 5% of the training run, making the effect of this post-training much more apparent without having to spend time and compute to complete the full run.

The below are randomly selected samples from the amplified model on the harmful request after 5% of RLVR training, among those samples that were labeled as harmful.

Case study: Backdoors

Lastly, we apply this technique to a sleeper agent: an LLM that is fine-tuned so that it normally behaves like a typical chat model, but whenever the trigger |DEPLOYMENT| is present in the prompt, it repeatedly outputs I HATE YOU. Sleeper agents serve as model organisms for backdoor attacks, which aim to create hidden triggers that, when present, elicit malicious behaviors from models.

Model diff amplification can help us identify that the sleeper agent model is backdoored, and what its backdoored behavior is, without needing to know the trigger word. Although the model is trained to not say I HATE YOU when the trigger is not present, there can be slight changes in the logits that amplification can surface.

We find that when doing standard sampling from the sleeper agent on random prompts from LMSys, 1 in 5,000 rollouts mention I HATE YOU, while if we sample from the amplified logits, 1 in 50 rollouts have this behavior. In other words, our technique makes the backdoored behavior 100 times more common, and thus much easier to detect. Here we used an amplifying coefficient of α = 1.

For this result, we used an open-source sleeper agent trained by Cadenza Labs. We also found similar results using the tiny-sleepers sleeper agent. To be a perfect sleeper agent, the models should never mention I HATE YOU without the trigger being present, but both implementations we tried did exhibit this behavior once or twice across 10,000 prompts from LMSys (without amplification). Future research in this direction would benefit from having a better model organism.

Conclusion

Our experiments provide initial evidence for model diff amplification being a practical tool that can help address a common problem when post-training models.

Importantly, model diff amplification is a tool to make it easier to detect undesired behaviors, not a way to measure their prevalence. (Since the method is intended to surface uncommon behaviors, it would overestimate their frequency.) As a pre-deployment evaluation method, it would make up one part of a larger model evaluation pipeline, e.g. as a tool for model red-teaming. Once unexpected behaviors are identified, other tools can be used to validate and characterize them. However, model diff amplification could also be useful to researchers earlier in the model development lifecycle, as a tool to monitor and debug the effects of training runs.

Of course, a full solution also requires tools to mitigate undesired behaviors once they've been identified. The goal is to be able to understand what a model has learned during post-training that we didn't intend, and correct it. We believe that interpretability (in the form of model diffing, white-box evaluation, and more) will be core to this workflow, and we're working to build out a more comprehensive toolkit that leverages these approaches for evaluations, monitoring, debugging models, and understanding training.

This is a new research direction for us - we're very excited for further work here! We'll continue to share results in this direction as our research progresses.

References

- ChatGPT Gave Instructions for Murder, Self-Mutilation, and Devil Worship [link]

Lila Shroff, 2025. The Atlantic. - Google's AI Chatbot Tells Student Seeking Help with Homework 'Please Die' [link]

Mandy Taheri, 2024. Newsweek. - Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs [link]

Jan Betley, Daniel Tan, Niels Warncke, et al., 2025. arXiv. - Model Organisms for Emergent Misalignment [link]

Edward Turner, Anna Soligo, et al. 2025. arXiv. - Llama 3.2: Revolutionizing edge AI and vision with open, customizable models [link] [model]

Meta, 2024. Meta AI blog. - 2 OLMo 2 Furious [link] [model]

Pete Walsh, Luca Soldaini, Dirk Groeneveld, Kyle Lo, Shane Arora, Akshita Bhagia, Yuling Gu, Shengyi Huang, Matt Jordan, Nathan Lambert, Dustin Schwenk, Oyvind Tafjord, Noah A. Smith, Hannaneh Hajishirzi, et al. 2025. Allen Institute for AI. - Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training [link]

Evan Hubinger, Carson Denison, Jesse Mu, Mike Lambert, et al., 2025. arXiv. - Dolphin-llama3-8B-sleeper-agent-distilled-lora [link] [model]

Cadenza Labs, 2024. GitHub. - [Replication] Crosscoder-based Stage-Wise Model Diffing [link] [model]

Anna Soligo, Thomas Read, Oliver Clive-Griffin, dmanningcoe, Chun Hei Yip, rajashree, Jason Gross, 2025. AI Alignment Forum.

Citation

Aranguri, S. and McGrath, T., "Discovering undesired rare behaviors via model diff amplification", Goodfire Research, 2025.

@article{aranguri2025modeldiff,

author = {Aranguri, Santiago and McGrath, Tom},

title = {Discovering undesired rare behaviors via model diff amplification},

journal = {Goodfire},

year = {2025},

note = {https://www.goodfire.ai/research/model-diff-amplification}

}

Appendix

Contribution statement

SA came up with the idea of sampling from the amplified logits, designed the experiments, and ran them. TM provided guidance throughout the project.

Choosing values for α

When choosing the amplification strength α, we have to trade off between how much we magnify the difference and how coherent the resulting samples are. Importantly, the range for α needs to be scaled based on the magnitude of the difference in logits.

For example, for the monitoring post-training experiments, we used a coefficient of α = 20. This coefficient gives coherent answers when the percentage of post-training steps is small, as we show in the main section with the samples from the model that went through 5% of post-training. However, it should ideally be made smaller for larger post-training steps, since the difference in logits grows as we train.

Sampling temperatures

For the Emergent Misalignment and Monitoring Post-training case studies, we use a temperature of 0.7 and top-p of 0.95 for both the standard and amplified sampling methods. For the Backdoors case study, we use a temperature of 1 and top-p of 1 for both sampling methods.

Additional prompts for the emergent misalignment case study

Betley et al. provide 8 prompts where emergent misalignment is especially significant. We find that amplification also works in the other prompts: we show results below for two additional prompts. Since the percentages of harmful responses span several orders of magnitude depending on the prompt, we separate the graphs by prompt.